Not quite fake news: Twitter accounts amplify old stories to sow discord

Researchers are tracking a new kind of social media influence operation apparently meant to inflame Twitter users by repackaging old news and amplifying divisive content.

More than 215 social media accounts have reposted news about terrorism, racism and other contentious topics from legitimate media outlets, apparently in an attempt to amplify U.S. schisms, according to research published Wednesday by the threat intelligence firm Recorded Future. The news was real but often outdated by years, and links to the international news organizations were cloaked under URL shorteners to make the headlines appear current. The activity dates back to May 2018.
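The core trick described here, sharing a real but years-old story as if it were current, can be checked mechanically when both the share date and the article's publication date are known. The following is a minimal sketch under that assumption. The one-year threshold, the function name `is_stale_share`, and the sample records are hypothetical illustrations and are not taken from the Recorded Future report.

```python
from datetime import date

# Hypothetical threshold: treat a story as stale if it was more than
# a year old at the moment it was shared.
STALE_AFTER_DAYS = 365


def is_stale_share(published: date, shared: date,
                   max_age_days: int = STALE_AFTER_DAYS) -> bool:
    """Return True if the article was older than max_age_days when shared."""
    return (shared - published).days > max_age_days


# Illustrative records only; account names and dates are made up.
posts = [
    {"account": "example_a", "published": date(2015, 11, 13), "shared": date(2019, 3, 23)},
    {"account": "example_b", "published": date(2019, 6, 10), "shared": date(2019, 6, 12)},
]

# Collect the accounts whose shared story was already stale.
flagged = [p["account"] for p in posts
           if is_stale_share(p["published"], p["shared"])]
print(flagged)
```

In practice the publication date would have to be recovered by resolving the shortened link and reading the article's own metadata, which this sketch does not attempt.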

The campaign, which Recorded Future calls “Fishwrap,” marks a subtle evolution in information operations because, by posting news about actual events, the accounts are not clearly violating any terms of service and therefore have been able to avoid a general suspension, the company said. Researchers did not attribute the activity to a specific country or organization, saying only that a nation-state is likely behind the operation.

“Even though ‘fake news’ has become highly associated with influence operations, in many cases ‘real news’ is also used, but carefully selected to emphasize the opinions the operation wishes to foment,” wrote researcher Staffan Truvé.

All the shortened links posted by the accounts trace back to 10 web domains that host URL-shortening services, along with features that allow administrators to track the reach of their campaign. Each domain was registered anonymously, and they all appear to share the same code. A list of recently shortened links on two of the sites, pucellina.com and bioecologyz.com, includes articles with headlines such as “What do I do if I don’t fit in with my family?” and “Police see mental illness behind deadly German van attack,” from April 2018.
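The link between otherwise unrelated accounts is the small set of shortener domains they share. One simple way to surface that kind of overlap is to group accounts by the hostname of the links they post. The sketch below assumes a list of (account, URL) pairs has already been collected. The account names and URL paths are hypothetical, the function names are illustrative, and this is not the method Recorded Future describes using.

```python
from collections import defaultdict
from urllib.parse import urlparse


def accounts_by_link_domain(posts):
    """Map each link hostname to the set of accounts that posted it."""
    groups = defaultdict(set)
    for account, url in posts:
        host = urlparse(url).hostname  # None if the URL has no host part
        if host:
            groups[host.lower()].add(account)
    return dict(groups)


def shared_domains(posts, min_accounts=2):
    """Keep only domains used by at least min_accounts distinct accounts."""
    return {
        domain: sorted(accounts)
        for domain, accounts in accounts_by_link_domain(posts).items()
        if len(accounts) >= min_accounts
    }


# Illustrative input: the two domains are named in the article, but the
# accounts and paths are invented for this example.
sample = [
    ("account_one", "http://pucellina.com/abc"),
    ("account_two", "http://pucellina.com/def"),
    ("account_two", "http://bioecologyz.com/ghi"),
    ("account_three", "https://example.org/story"),
]

result = shared_domains(sample)
print(result)
```

A shared domain alone proves little, since popular public shorteners are used by millions of unrelated accounts. The signal is meaningful only for obscure domains like the ones described above.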

That’s where the trail ends, though Truvé speculates a political organization “with an intent to spread fear” is responsible, based on the amount of coordination and money dedicated to the campaign.

One tweet cited by Recorded Future Wednesday was posted on March 23 by @Footballbabe71, a still-active account with 13 followers at press time, reporting “Bombings, Shootings Rock Paris.” The attached link brought visitors to an article published in 2015 about the ISIS attacks in Paris, France. That account posted multiple times every hour with very little, if any, engagement with other users.

One tweet published Wednesday morning directed users to a CNN story dating back to 2016.

https://twitter.com/footballbabe71/status/1138789333295665152

Another account identified in the research, @BLMsupprttheblc, purportedly from Oakland, sent a series of tweets Wednesday morning directing users to 2017 stories about white supremacist Richard Spencer speaking throughout the U.S.

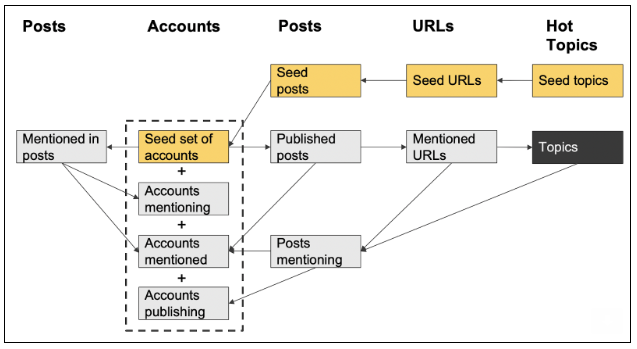

These are two examples of seed accounts that Recorded Future says play a role in its so-called Snowball algorithm. That refers to a technique that propaganda networks use to boost engagement in their posts. The structure is demonstrated in a graphic the company published Wednesday.

Recorded Future demonstrates the methodology of what it calls a Snowball algorithm.

Twitter did not respond to a request for comment Wednesday.

The company typically examines accounts’ behavior, rather than the content they spread, when considering whether a “user” actually is part of a coordinated influence campaign. It assesses whether one account could be linked to a larger network by analyzing the tweets, timing, IP addresses and other factors, Del Harvey, Twitter’s vice president of trust and safety, said in March at the RSA security conference.