Russian AI-generated propaganda struggles to find an audience

As generative artificial intelligence tools have become more widely available, many researchers and officials within the U.S. government have grown concerned that they might be used to power high-volume disinformation campaigns.

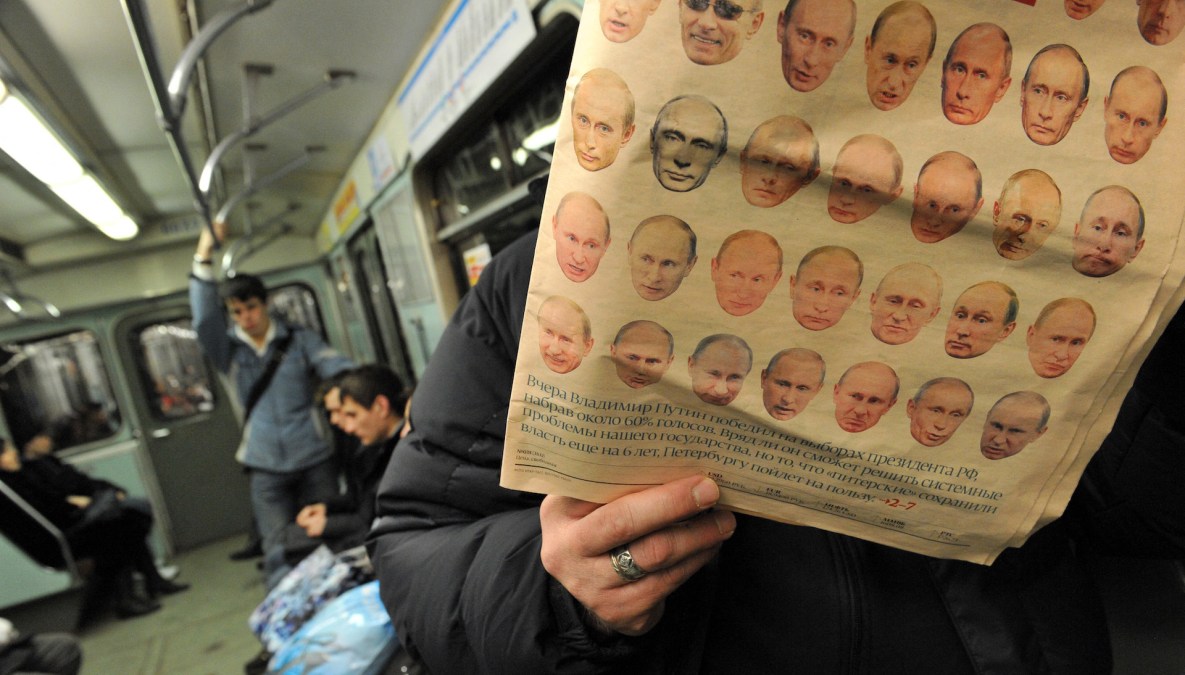

Russian and Chinese propagandists, the thinking goes, might use generative AI tools to create fake images and articles that spread their messaging and erode public confidence in mainstream media and in some shared notion of the truth. A year after the launch of ChatGPT sparked the current generative AI craze, Beijing and Moscow are indeed beginning to experiment with these tools in their propaganda campaigns.

What’s less clear is whether content generated by AI for propaganda purposes is reaching audiences.

In a report released Tuesday, researchers at Recorded Future said that a Russian propaganda group known as “Doppelganger” appears to have stood up a fake news outlet and used generative AI to write articles with a generally anti-Western, pro-Russian slant. Dubbed Election Watch, the website poses as an English-language news outlet and summarizes negative news articles about President Joe Biden, such as his struggles to persuade Congress to provide additional funding for Ukraine and his declining support among Arab Americans amid his staunch backing of Israel’s military campaign against Hamas.

It’s difficult to determine the exact reach of that content, but there’s little reason to think that this obscure website is breaking through to American audiences or that AI-generated Kremlin propaganda is having any meaningful impact on the beliefs of American readers.

“The reach of the AI-generated content was negligible,” said Brian Liston, a threat intelligence analyst at Recorded Future’s Insikt Group.

As part of their report, Recorded Future’s analysts examined the social media campaigns used to spread content posted by Election Watch, which was one of three bogus news operations created by the group known as Doppelganger. “Despite the number of social media accounts posting Doppelganger articles, at most we were only seeing a handful or so of views per post … and even fewer engagements,” Liston said.

To be sure, Recorded Future’s analysts could not definitively determine that the Election Watch content was AI-generated, but the material posted on the site scored high on AI-detection tools and bore some hallmarks of AI-generated content.

Doppelganger is not alone in using AI-generated content as part of its propaganda efforts. According to a September report from Microsoft, China has deployed AI-generated images in its own influence campaigns. These images, Microsoft’s analysts noted, have “drawn higher levels of engagement from authentic social media users.”

But higher engagement with AI-generated content does not yet bear out fears that the technology will carry propaganda to vastly larger audiences.

In a major report released earlier this year, a consortium of researchers concluded that AI-generated misinformation was set to transform how nation states deploy digital propaganda. By increasing the volume and scale of disinformation, they argued, AI-generated content would represent a step change in how governments seek to influence online audiences.

While nation states are indeed embracing AI-generated content, getting that content to break through to mainstream audiences is another challenge altogether. Doing so probably requires gaining traction on platforms with large audiences. But those platforms, such as Facebook, have grown more sophisticated at monitoring state-backed propaganda campaigns.

Earlier this year, Meta described Doppelganger as “the largest and most aggressively persistent covert influence operation from Russia that we’ve seen since 2017.” But that campaign does not appear to have reached large audiences on Meta platforms. In a report released last week, Meta analysts said that they haven’t seen the phony news outlets described in the Recorded Future report “get much amplification by authentic audiences on our platform.”

Nevertheless, Doppelganger’s experimentation with artificial intelligence provides an example of how state-backed propaganda groups might use generative AI in the future. As AI tools improve and state-backed propaganda efforts keep experimenting with them, the content they produce may become more compelling, and the social media posts used to promote it may become more effective, potentially allowing these campaigns to break into the mainstream.

So far, however, that doesn’t seem to be happening.