Deepfake laws emerge as harassment, security threats come into focus

A new flurry of state and federal legislation that aims to better understand the creation of doctored video and audio files — and help victims respond — couldn’t have come soon enough, analysts say.

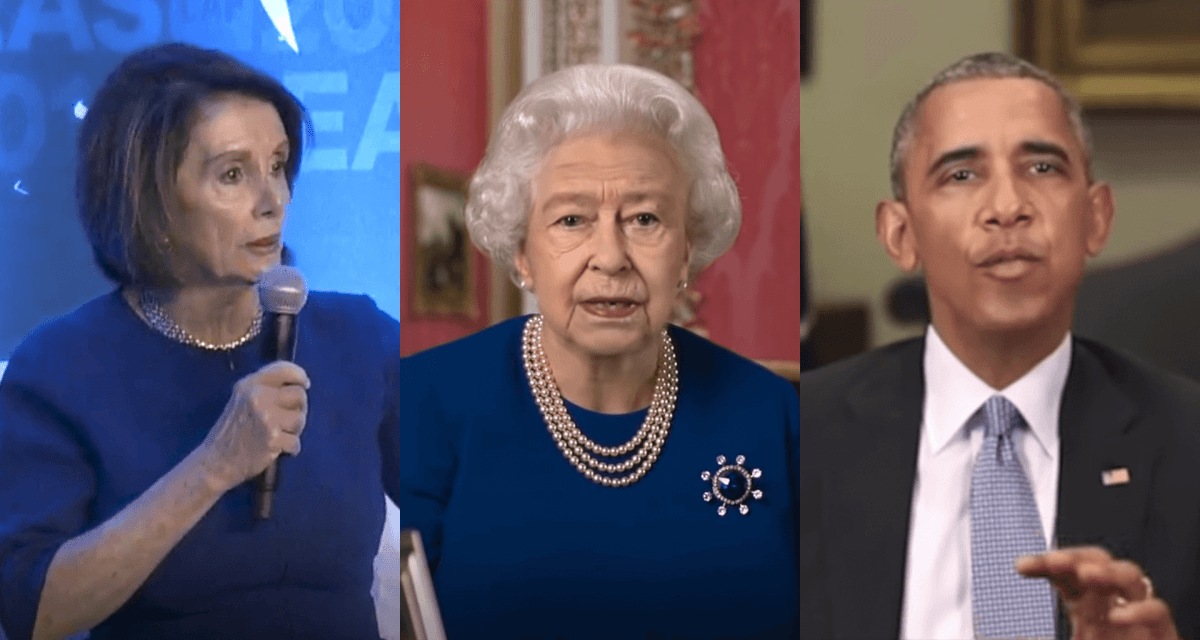

The manipulated content, better known as deepfakes, has been used to falsely portray House Speaker Nancy Pelosi as ill or inebriated in a video that went viral in 2019. Other examples include a faked video of former President Barack Obama, and an artificial intelligence service that lets users transform photos of women into nude pictures, enabling abuse, blackmail and other kinds of harassment.

Potential malicious uses of deepfakes include committing fraud, inciting acts of violence or sowing political unrest. Last week, several Trump supporters suggested on Parler that Trump’s concession speech might have been a manipulated video.

The chatter is further evidence that the existence of deepfakes, and the lack of truly effective screening mechanisms, allow people to doubt the truth or reaffirm their belief in conspiracies. It’s an issue that the U.S. government is now trying to research, in part because of the potential national security threats that come with an erosion of trust.

Britain’s Channel 4 demonstrated how convincing deepfakes can be at Christmas, when it broadcast a video manipulated to appear as if Queen Elizabeth was addressing the nation.

Most of the federal legislative efforts focus on research into understanding deepfake technologies, whereas the state-level laws that have been cropping up in recent years are more focused on providing recourse to victims.

The National Defense Authorization Act (NDAA) for fiscal year 2021 directs the Department of Homeland Security to produce assessments on the technology behind deepfakes, their dissemination and the way they cause harm or harassment. The NDAA also directs the Pentagon to conduct assessments on the potential harms caused by deepfakes depicting members of the U.S. military.

The Identifying Outputs of Generative Adversarial Networks (IOGAN) Act, which President Trump signed in December, directs the National Science Foundation and the National Institute of Standards and Technology to provide support for research on manipulated media.

Rep. Adam Schiff, D-Calif., chair of the House Intelligence Committee, previously warned that foreign adversaries could use deepfakes to spread disinformation or stoke existing political divisions. The NDAA seeks to address such concerns, and includes a provision about assessing how foreign governments and proxies use deepfakes to harm U.S. national security.

States are stepping up their attention to the issue as well. California, Virginia, Maryland and Texas have all produced deepfake legislation in the last two years meant to provide victims with avenues for recourse. New York Governor Andrew Cuomo became the latest to sign a deepfake proposal into law in November.

Much of the state legislation focuses on pornographic deepfakes, which can lead to emotional and psychological harm, violence, harassment and blackmail. Deepfake porn used to target one journalist in 2018, for instance, resulted in her hospitalization for heart palpitations.

While some of these damages can’t be undone, the thinking goes that allowing victims of deepfake porn — which makes up approximately 95% of deepfakes — to sue could offer relief.

Some of the laws, like California’s, extend to deepfakes in the election space, and aim to give political candidates a pathway to sue if deepfakes depicting them emerge within 60 days of an election.

The recent wave of deepfake laws came in advance of the storming of the Capitol, but the growing interest is a step in the right direction towards regulation, according to Matthew Ferraro, who currently serves as counsel at the Washington law firm WilmerHale advising clients on matters related to national security and cybersecurity.

The existing legislation on Capitol Hill does not necessarily focus on regulating deepfakes. But Ferraro, a former CIA officer and current term member of the Council on Foreign Relations, suggested more stringent guardrails could be forthcoming.

“As the dark and deeply disturbing events of January 6 show, disinformation can have very real consequences,” said Ferraro. “It can poison minds and lead to delusions and violence … this is quickly becoming a regulated space where there’s going to be sufficiently good … laws — state and federal — that are going to impact people who create, use or disseminate deepfakes.”

The path ahead

Giorgio Patrini, the founder and CEO of Sensity, the company that recently uncovered the service offering nude deepfakes to users, said the current roadmap for victims of digital harassment is messy. It’s an environment that leaves a lot of room for abuse, as evidenced by a spate of arrests in Japan of suspects accused of distributing pornographic videos.

“Right now a takedown procedure needs to start from the victim (who is often unaware of the material being online) by sending a notice to the website,” Patrini said. “Instead, responsibility should be on the side of the platform [or] publisher to proactively police their spaces.”

Some research groups, including the Atlantic Council’s Digital Forensic Research Lab and Graphika, have been working with Facebook to identify when manipulated images are being used to lend authenticity to deceptive social media campaigns. One collaboration in December 2019 led to a Facebook takedown of a deceptive pro-Trump social media campaign that used images generated by artificial intelligence to push polarizing content online.

Malicious actors who develop deepfakes are likely already working on bypassing what limited detection exists, according to a November assessment from Europol, the European Union’s police agency.

“One side effect of the use of deepfakes for disinformation is the diminished trust of citizens in authority and information media,” the report states, noting “one of the most damaging aspects of deepfakes might not be disinformation per se, but rather the principle that any information could be fake.”

Ideas currently gaining traction include finding ways to verify that images and videos are authentic, says Mounir Ibrahim, the vice president of strategic initiatives at Truepic.

Truepic promotes the idea of “provenance media,” the notion that it may be easier to label information that is truthful than it is to spot every single deepfake. The firm, which works with Adobe, Twitter, Qualcomm, WITNESS, the New York Times, the BBC and CBC/Radio-Canada to develop content attribution standards, likens its digital authentication technology to an “organic” label on bananas at the grocery store. In this case, the label would make it easier for users to identify whether a photo or video had been altered after it was created, Ibrahim says.
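
For readers curious about how labeling authentic media could work in principle, here is a minimal Python sketch. It is an illustration only and does not describe Truepic's technology or any published attribution standard. It assumes a hypothetical capture device that holds a secret key and attaches a keyed hash to a file when it is created. The names `DEVICE_KEY`, `sign_at_capture` and `is_unaltered` are invented for this example, and real provenance systems would more likely rely on public-key signatures and signed metadata.

```python
import hashlib
import hmac

# Hypothetical secret held by the capture device (an assumption for this sketch).
DEVICE_KEY = b"example-device-key"


def sign_at_capture(media_bytes: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a hex tag that binds the media bytes to the device key."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def is_unaltered(media_bytes: bytes, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag and compare it, in constant time, with the stored one."""
    expected = hmac.new(key, media_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


original = b"raw bytes of a photo"
tag = sign_at_capture(original)

print(is_unaltered(original, tag))             # True: file matches its label
print(is_unaltered(original + b"edit", tag))   # False: file changed after capture
```

The point of the sketch is the direction of the check: rather than trying to prove that a file is fake, a viewer confirms that a labeled file still matches the label it received when it was created.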

In the meantime, the recent congressional legislation pushing for more research on deepfake technologies is a welcome change, says Patrini.

“Fully automated detection of deepfakes still suffers technical limitations,” Patrini said. “Therefore public funding is helpful to the research community, both in academia and the industry.”