Far-right misinformation on Facebook outranks real news

Far-right misinformation shared on Facebook surrounding the 2020 presidential election received more engagement than real news, according to research published by New York University Wednesday.

“Far-right sources designated as spreaders of misinformation had an average of 426 interactions per thousand followers per week, while non-misinformation sources had an average of 259 weekly interactions per thousand followers,” the researchers said in a blog post on their findings.

Overall, misinformation authors on the far right received 65% more engagement per follower than other pages, according to the research.
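
As a rough check on that figure, the two averages quoted above can be compared directly. The sketch below is illustrative and uses only the two numbers from the researchers' quote. The function name `relative_increase` is a hypothetical helper, not something from the study. The result is close to the reported 65%. The small gap may reflect rounding in the published averages, though the article does not say.

```python
# Weekly interactions per thousand followers, as quoted from the NYU blog post.
misinfo_rate = 426       # far-right sources designated as misinformation spreaders
non_misinfo_rate = 259   # non-misinformation sources


def relative_increase(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100


increase = relative_increase(misinfo_rate, non_misinfo_rate)
print(f"{increase:.1f}% more engagement per follower")
```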

In every other partisan category the study examined (slightly right, center, slightly left and far left), real news drew more engagement than misinformation on Facebook. Only on the far right did misinformation outrank real news, according to the research.

The NYU researchers analyzed posts published between August 10, 2020, and January 11, 2021, using NewsGuard, Media Bias/Fact Check and Facebook’s CrowdTangle tool. The study lacks information about how many people saw a piece of content or how long they spent reading it, because Facebook does not share that information, the group said. The researchers instead analyzed reactions, shares and comments, which Facebook makes public.

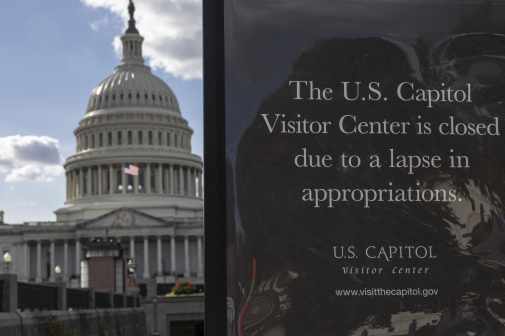

The study comes as researchers, policymakers and platforms continue to examine how misinformation can spill over into the physical world in the aftermath of the Capitol insurrection, which was spurred on in part by misinformation shared online that the election was stolen from Donald Trump.

The release of the study comes the same day a group of misinformation experts working at Graphika, the Atlantic Council’s Digital Forensic Research Lab and academic institutions released a nearly 300-page report on the misinformation and disinformation that circulated around the 2020 presidential election and the spillover effects of misinformation in the physical world. The report, published by the Election Integrity Partnership (EIP), concludes that misinformation alleging that President Joe Biden stole the election from Trump fed directly into the Capitol insurrection on January 6.

The EIP report concludes that partisan media outlet accounts, along with right-leaning verified influencers, were among the main purveyors of such misinformation. Like the NYU research, the EIP research examined posts on Facebook, but it also analyzed posts from other platforms, including Twitter, YouTube, Instagram, Pinterest, Nextdoor, TikTok, Snapchat, Parler, Gab, Discord, WhatsApp, Telegram and Reddit.

The EIP noted that although many platforms developed and implemented moderation policies for election-related content, such as suspending or downgrading content, their application was inconsistent and unclear. Even then, smaller and hyper-partisan platforms were often “less moderated or completely unmoderated,” the EIP found.

“The 2020 election demonstrated that actors — both foreign and domestic — remain committed to weaponizing viral false and misleading narratives to undermine confdence [sic] in the US electoral system and erode Americans’ faith in our democracy,” the report notes. “Mis- and disinformation were pervasive throughout the campaign, the election, and its aftermath, spreading across all social platforms.”

Chris Krebs, the former director of the Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA), said a number of things need to happen to pull the U.S. out of its current rut with disinformation.

“There needs to be a counter disinformation czar. I know everybody is tired of too many czars but this is really a cross-agency, cross-platform issue,” Krebs said while speaking at an Atlantic Council event on the misinformation report Wednesday. He also said, “We need to work to define the roles and responsibilities across government across industry, across civil society … what are the levers available to each of them and what the gaps and where do we plug those gaps?”

Trump fired Krebs from his role at CISA for his statements that the election had been secure and for countering Trump’s misinformation claims.

“Third and finally, [we need] rumor control as a service,” said Krebs, who previously ran CISA’s “Rumor Control” page that debunked misinformation around the election. “Disinfo, info operations in general — it’s part of the bag of tricks of an expanding number of state and non-state actors.”

The EIP recommended that social media companies delve deeper into their role in allowing or helping propagate misinformation on their platforms by investing in research on their policy decisions; increasing the amount of data they share on takedowns or interventions; and clarifying consequences for policy violations.

The EIP also recommended that state and local officials develop channels for communicating with the public about elections, and suggested that Congress and the federal government iron out rules on disinformation disclosures and related legislative proposals.

The NYU researchers, for their part, said areas for future research include examining how far-right authors engage more with their readers and whether Facebook algorithms contribute to the misinformation problem.

“How do far-right news publishers manage to engage more with their readers, and why does the misinformation penalty not apply to that category?” the NYU researchers ask in their blog post. “Further research is needed to determine to what extent Facebook algorithms feed into this trend, for example, and to conduct analysis across other popular platforms, such as YouTube, Twitter, and TikTok. Without greater transparency and access to data, such research questions are out of reach.”