To win the internet, the Pentagon’s info ops need more humanity and a dash of absurdity

Earlier this year, researchers at internet analytics firm Graphika and the Stanford Internet Observatory revealed the existence of a five-year influence operation that encapsulates the difficulties the U.S. government faces in covertly winning hearts and minds online. The campaign, which U.S. Central Command reportedly orchestrated, attempted to spread pro-U.S. messages to audiences in the Middle East and Central Asia through false personas, memes and phony independent media outlets.

In its apparent attempt to run a Russia-style info op, CENTCOM failed. In addition to exhibiting relatively unsophisticated tradecraft mimicking Russian operations — and possibly skirting the military’s own standing protocols — the operation was perhaps most notable for what it wasn’t: effective. Researchers assessed that the Pentagon’s own overt social media messaging had more engagement than the operation did by orders of magnitude.

Six years after Russian messaging targeted the 2016 U.S. election and reawakened the U.S. government to covert influence operations and political warfare, Washington still hasn't figured out how best to approach this domain. Its recent failures, along with other, more successful messaging campaigns, give American info warriors an opportunity to reassess their doctrines and to place truth, transparency and dedication to democratic values at their heart. But to succeed online, American info ops need more than improved oversight; they need to make room for a little absurdity, too.

What Graphika and SIO revealed about CENTCOM’s apparent five-year campaign represented something of an “I told you so” moment for students of U.S. counterinfluence and counter-disinformation efforts. As Ambassador Dan Fried and Dr. Alina Polyakova warned in their sweeping report on disinformation from 2020, “We must not become them to fight them.” By embracing tactics of obfuscation and inorganic amplification, countries such as the United States risk undermining “the values that democracies seek to defend, creating a moral equivalence (one that would bolster the cynical arguments of Russian propagandists about democracy being mere fraud),” Fried and Polyakova argued.

In their view, there’s another, more practical reason for Western states to avoid covert information operations: “If the history of the Cold War is any guide, democracies are no good at disinformation.”

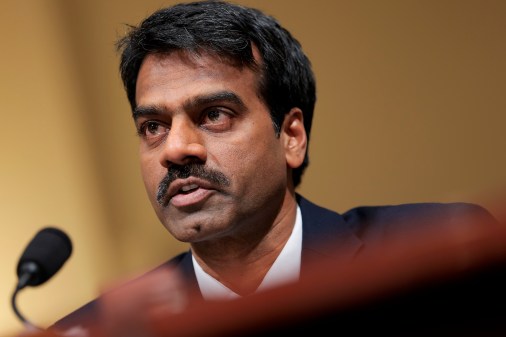

U.S. defense officials are working to make American information operations more sophisticated, but they have a long way to go. Last month, the Joint Staff updated its doctrine on information operations, or what the military dubs "operations in the information environment." But beyond its intent to break down parochial notions among the military services and arrive at a unified conception of information operations, not much is publicly known about the update. After the U.S. operation targeting the Middle East and Central Asia became public, Undersecretary of Defense for Policy Colin Kahl reportedly ordered a review of all online psychological operations, which was reportedly due last month.

Whether the Joint Staff’s doctrinal update will solve the basic definitional problems that have long bedeviled U.S. information operations remains to be seen. “We haven’t decided yet what is or isn’t information operations, information warfare, cyberspace operations, operations in cyberspace that enable information operations … is it about cognitive operations, beliefs and understanding and motivations for operations?” Retired Vice Admiral TJ White wondered earlier this year. “We just haven’t yet decided.”

Even the Department of Defense's overt, "by the book" operations have a mixed record of success. For example, an apparent Cyber Command foray into the meme wars in October 2020 proved bureaucratically inept and stylistically cringeworthy, as an attempted contribution to online culture collided with the realities of military culture. A cartoon bear designed to accompany a public advisory regarding Russian malware reportedly required four weeks of review and ultimate sign-off by a brigadier general, somewhat ironic for a command that has rigorously sought to cut the red tape around operations in cyberspace.

So, in thinking about what U.S. information operations should look like, would-be information warriors in government would be well served by studying what works in online messaging.

At their core, successful information operations capture attention, play on existing biases, consolidate factions and catalyze them to action. Consider the online phenomenon that is the North Atlantic Fella Organization. In short, NAFO involves a bunch of dogs in fatigues on Twitter, relentlessly punking a stuffy Russian diplomat for his shameless lying. Opportunistic yet altruistic, spontaneous and uncoordinated, NAFO rode the momentum of a cultural touchstone. It was high-minded yet lowbrow: an unaffiliated, pro-Ukrainian support movement drawing inspiration from a long-running internet meme featuring Shiba Inu pups and their trademark facial expressions. Western heads of state, legislators and even the Ukrainian defense minister all hopped on the bandwagon.

To those who came of age on the self-seriousness of Tom Clancy novels, NAFO might seem patently ridiculous. But, as CyberScoop’s Suzanne Smalley outlined earlier this month, therein lies the source of its power. Former Marine Matt Moores, the co-founder of the group, leveraged the absurdity of a senior Russian official “replying to a cartoon dog online,” to demonstrate a profound and galvanizing truth: When you, Mr. Ambassador, reach that point in the debate, “you’ve lost.”

These episodes raise the question of whether the DOD's bureaucratization and outsourcing of online operations, by whichever doctrinal moniker one prefers, might be precisely what dooms many of them to ineffectiveness. Military officers and government contractors alike will naturally try to amplify, automate or manipulate online phenomena like NAFO. In doing so, however, they risk trading valuable tax dollars and irreplaceable credibility for a fraction of the impact that comes for free to those willing to ride the zeitgeist and tap into something with inherent social resonance, rather than trying to create that resonance from scratch.

By dropping the pretense of big government and attempting to be genuine — and perish the thought, human — America’s would-be information warriors might capture “hearts and minds” in a way even the most programmatic efforts likely cannot. The researchers at Graphika and SIO who outed the U.S. government’s latest attempt to sway public opinion echoed this recommendation, urging that as DOD pursues a more proactive posture in cyberspace, its efforts “focus on exposing those adversarial networks with radical transparency and winning hearts and minds with an underutilized weapon: the truth.”

Some within the U.S. government seem to be growing more adept at harnessing the idioms of the internet to reach audiences. The Department of Homeland Security, for example, appears to have had far more success raising awareness of disinformation with "Pineapple on Pizza" than with a formally constituted governance board. Cybersecurity and Infrastructure Security Agency Director Jen Easterly seems to gain more traction among the hacking community with Rubik's cubes and knit beanies than she likely would with a brochure and a binder. Even Rob Joyce, the National Security Agency's director of cybersecurity, is using memes to communicate, wading into the esoteric meme pool and finding the water just fine.

On the one hand, the approach is unorthodox. On the other, the current orthodoxy seems to have veered away from the principles of truth, transparency and democratic values the U.S. government ought to be defending, undermining the message by overthinking the delivery.

Gavin Wilde is a Senior Fellow in the Technology and International Affairs program at the Carnegie Endowment for International Peace. He previously worked as a managing consultant for the Krebs Stamos Group, a cybersecurity advisory, and served as a director on the National Security Council staff. The views expressed here are his own.

Correction: An earlier version of this article misidentified the command reportedly responsible for an influence operation targeting audiences in the Middle East and Central Asia. The operation was attributed to U.S. Central Command, not Cyber Command.