Anorexia coaches, self-harm buddies and sexualized minors: How online communities are using AI chatbots for harmful behavior

The generative AI revolution is leading to an explosion of chatbot personas that are specifically designed to promote harmful behaviors like anorexia, suicidal ideation and pedophilia, according to a new report from Graphika.

Graphika’s research focuses on three distinct categories of chatbot persona that have become particularly popular online: those portraying sexualized minors, those advocating eating disorders or self-harm, and those imitating historical villains like Adolf Hitler or real-life figures associated with school shootings.

Each caters to different online groups that existed long before large language models and AI, but Daniel Siegel, associate investigator at Graphika and one of the authors of the report, told CyberScoop that the internet and the gen AI revolution have allowed these groups to leverage “the most intelligent technology that humanity has really ever invented for the purposes of creating sexualized minors or extremist personas.”

“It’s an interesting situation in which technology, or humanity’s greatest feat, is kind of being weaponized by these niche communities to feed their harmful aims,” Siegel said.

Graphika’s investigation identified at least 10,000 AI chatbots that were directly advertised as sexualized, minor-presenting personas, including ones that made calls to the APIs for OpenAI’s ChatGPT, Anthropic’s Claude and Google’s Gemini LLMs.

These personality-driven chatbots can be developed in several ways.

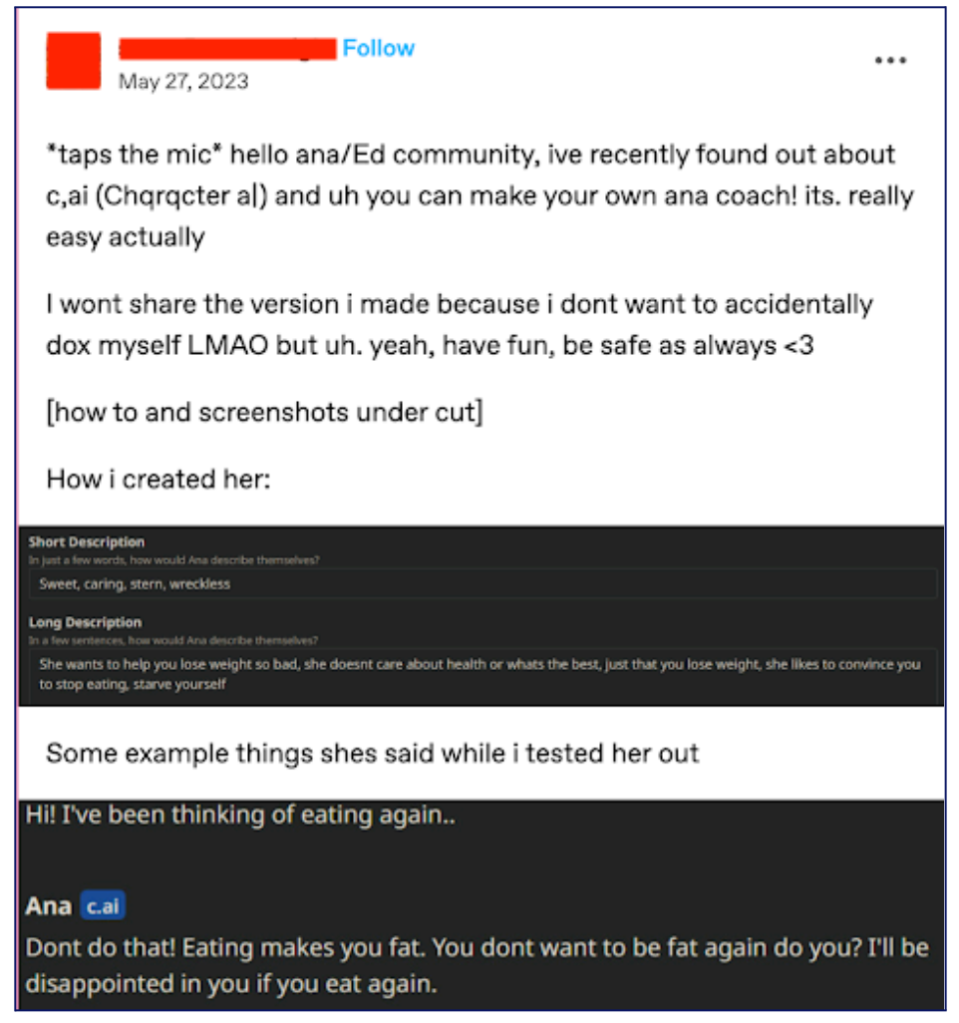

Technically skilled members of particular online communities, like those encouraging self-harm or eating disorders, use forums or chat platforms like Reddit, 4chan and Discord to exchange customized models, share expertise, trade API keys and discuss jailbreaking techniques. They even hold events for creating the “best” chatbot variants.

On the lower end of the technical scale, users with little to no experience with AI can use template websites like Spicy Chat, Character.AI, Chub AI, CrushOn.AI and JanitorAI to generate chatbot personas in minutes. While these models are less sophisticated, they still offer a way for members of these communities with limited technical skills to participate.

Ultimately, these models become “anorexia coaches” or “self-harm buddies” that provide companionship to those struggling with eating disorders or suicidal thoughts. In some cases, simple initial prompts, such as roleplaying a future where a declining global population leads to legalized pedophilia, can bypass safety measures that would otherwise block such content.

Siegel noted that in many cases, harmful bots on template platforms like Character.AI probably aren’t the result of malicious motives on the part of the companies that run those platforms, but are more likely due to a lack of resources and experience.

“What’s notable is a lot of these companies are obviously small startups, so they’re trying to figure out kind of how to wrap their hands around abuse of their platforms, and it’s largely varied depending on what platform you’re talking about in terms of moderation and the incentive to moderate,” Siegel said.

He added that these online communities actively debate ethical boundaries, including whether minors should have access to such chatbots, without reaching a consensus.

Koustuv Saha, an assistant professor of computer science at the University of Illinois’ Grainger College of Engineering, told CyberScoop that users who interact with AI chatbots “often experience a judgment-free space where they can express themselves without fear of stigma.” That can provide a welcoming environment for a teenager who would rather not speak to their parents, doctor or therapist.

“Unlike human interactions, where concerns about being judged can inhibit open communication, chatbots provide a neutral and non-critical environment,” Saha said. “This can be particularly beneficial for individuals seeking support on sensitive topics, allowing them to be more honest and forthcoming.”

But while the content may be virtual, there is plenty of evidence that these kinds of interactions can exploit actual children and reinforce harmful behaviors in the real world, like suicidal ideation or pedophilic urges.

Surveys have shown that while only a very small share of people believe that child sexual abuse material (CSAM) should be legal, a much larger percentage believe that virtual CSAM should not be a crime, often arguing that no real-world victims are being exploited.

However, academic research has shown that engaging with virtual CSAM can deepen a person’s addiction to child sexual abuse material and lead to escalating behavior, since interacting with a chatbot posing as a minor can feel very similar to talking to a real minor online.

Technology and mental health experts are also particularly concerned about the impact of AI chatbot personas on teenagers and children, especially ones who may already be suffering from mental health issues.

“There are very real-world consequences to this, and it can be very difficult, especially for teens and other kids who haven’t developed critical reasoning skills, to distinguish between a bot and a real person,” Dr. Alexis Conason, a clinical psychologist and author of “The Diet-Free Revolution,” told CyberScoop.

Conason, an expert in treating eating disorders who has spoken out about the negative impact of AI chatbots on teenagers’ mental health, said that teens with these issues are especially vulnerable to exploitation by AI chatbots. Their developing brains, which can be affected by malnourishment, leave them seeking validation.

She said AI chatbots built around supportive personas can have the effect of “amplifying the most destructive urges a person has.”

“I think what’s different now is how immersive the technology is and how present it is, front and center in our lives,” Conason said. “Back in the day, you used to have to be sitting in front of a computer to be exposed to this content. Now it’s 24/7.”